Decision Support Systems (DSS) have long been critical in helping organizations make informed decisions. With the integration of Artificial Intelligence (AI), these systems have become more dynamic and responsive to complex data environments. Generative AI, which produces insights, predictions, and decisions from patterns in data, is one of the most transformative developments in this space. However, the effectiveness of AI in enhancing DSS depends on the availability and quality of data.

The Role of Large Volumes of Data in AI and DSS

AI models, including those used in DSS, thrive on data. As a rule, the more representative data they are exposed to, the better they can recognize patterns, generate predictions, and adapt to new information. For a DSS to be accurate, the AI powering it must be trained on vast, diverse datasets. This allows the system to make well-rounded, generalizable decisions.

Key benefits of large data volumes include:

- Pattern Recognition: AI models need many data points to identify trends, anomalies, and outliers, leading to more accurate predictions.

- Generalization: With larger datasets, models can generalize across different scenarios, making them less likely to overfit to specific cases.

- Adaptability: Continuous streams of large data allow AI to update its understanding, making DSS more adaptive to changing environments.

Data Quality: The Foundation for AI Accuracy

While large volumes of data are essential for training AI systems, the quality of that data plays an even more critical role in the effectiveness and accuracy of an AI-powered DSS. High-quality data helps the AI model make reliable, unbiased, and actionable recommendations, which is key to the success of DSS across industries. Poor-quality data, on the other hand, can lead to incorrect predictions, misinformed decisions, and, ultimately, loss of trust in the system.

Key Dimensions of Data Quality

For AI to achieve its full potential in DSS, data needs to meet several quality criteria:

- Accuracy

Data accuracy refers to how closely the data reflects the real-world scenario it is supposed to represent. Inaccurate data, whether due to human error, outdated information, or faulty data collection methods, can skew model training and significantly distort the model’s predictions. Models trained on erroneous datasets may generalize poorly, impacting decision-making across the system.

- Completeness

Data completeness means that all necessary data points are available. Missing data, especially in large datasets, introduces gaps in understanding that reduce the model’s ability to make accurate predictions. Incomplete data can also lead to biased predictions, as the AI may misinterpret the gaps or fill them with incorrect assumptions.

- Consistency

Data consistency refers to maintaining uniform formats, standards, and definitions across datasets. Inconsistent data, such as one system recording dates as “MM/DD/YYYY” and another as “DD/MM/YYYY”, can confuse models during training, interpretation, and inference, ultimately reducing decision accuracy. Consistent data ensures that different parts of a DSS can communicate seamlessly and derive the correct results.

- Relevance

Data must be relevant to the problem the AI system is trying to solve. Irrelevant or outdated data dilutes the model’s ability to learn from meaningful patterns, potentially leading to misaligned or off-target predictions and poor decision-making.

- Timeliness

Timely data ensures that the AI system is using up-to-date information, which is especially critical for real-time decision-making. Stale or outdated data can make a DSS reactive instead of proactive, limiting its ability to provide valuable insights. Time-sensitive models, such as those used in financial trading or emergency response systems, rely heavily on the freshness of data. Delayed or outdated data can lead to critical errors in decision-making.
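
Several of the dimensions above can be turned into simple, measurable checks. The following is a minimal sketch, using only the Python standard library, of how completeness, consistency, and timeliness might be scored for a small batch of records. The record layout, the field names (`customer_id`, `signup_date`, `updated_at`), the ISO date format, and the 30-day freshness window are all illustrative assumptions rather than part of any particular DSS.

```python
from datetime import datetime, timedelta

# Hypothetical records; field names and values are illustrative only.
RECORDS = [
    {"customer_id": "C1", "signup_date": "2024-01-15", "updated_at": "2024-06-01"},
    {"customer_id": "C2", "signup_date": "03/04/2024", "updated_at": "2024-05-28"},
    {"customer_id": "C3", "signup_date": None, "updated_at": "2023-11-02"},
    {"customer_id": "C4", "signup_date": "2024-02-20", "updated_at": "2024-05-30"},
]

EXPECTED_DATE_FORMAT = "%Y-%m-%d"  # assumed house standard for dates


def is_present(value):
    """True if the value is neither None nor an empty string."""
    return value not in (None, "")


def matches_format(value, fmt=EXPECTED_DATE_FORMAT):
    """True if the value parses under the expected date format."""
    try:
        datetime.strptime(value, fmt)
        return True
    except (TypeError, ValueError):
        return False


def completeness(records, field):
    """Share of records where the field is present."""
    return sum(is_present(r.get(field)) for r in records) / len(records)


def consistency(records, field):
    """Share of present values that follow the expected date format."""
    values = [r[field] for r in records if is_present(r.get(field))]
    if not values:
        return 1.0
    return sum(matches_format(v) for v in values) / len(values)


def timeliness(records, field, as_of, max_age_days=30):
    """Share of records updated within max_age_days of the as_of date."""
    cutoff = as_of - timedelta(days=max_age_days)
    fresh = 0
    for r in records:
        value = r.get(field)
        if matches_format(value):
            if datetime.strptime(value, EXPECTED_DATE_FORMAT) >= cutoff:
                fresh += 1
    return fresh / len(records)


def quality_report(records, as_of):
    """Collect the three scores into one dictionary."""
    return {
        "completeness": completeness(records, "signup_date"),
        "consistency": consistency(records, "signup_date"),
        "timeliness": timeliness(records, "updated_at", as_of),
    }


REPORT = quality_report(RECORDS, as_of=datetime(2024, 6, 2))
print(REPORT)
```

In this sample, one record is missing `signup_date` and another uses a different date format, so both completeness and consistency fall below 1.0. Accuracy and relevance are harder to score automatically because they usually require a trusted reference source or domain judgment.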

The Impact of Poor Data Quality on AI-Driven DSS

The consequences of poor data quality can be severe, particularly in AI-driven DSS:

- Bias and Skewed Predictions: If an AI system is trained on biased data or data that lacks diversity, the model may develop inherent biases. For example, if a DSS for recruitment is trained primarily on data from male applicants, it may undervalue female candidates, leading to biased hiring decisions. Managing data bias is a critical concern, and ensuring diverse, high-quality datasets is essential for AI fairness.

- Overfitting and Underfitting: Poor-quality data can also contribute to overfitting or underfitting in machine learning models. Overfitting occurs when the model becomes too specific to the training data, including its noise and errors, leading to poor generalization in real-world scenarios. Underfitting, on the other hand, happens when the model fails to capture the underlying patterns, often because the model is too simple or the data is too noisy or sparse to reveal them.

- Incorrect Decision-Making: Inaccurate or incomplete data directly impacts the decision-making capability of DSS. Whether it’s recommending the wrong course of treatment in healthcare or suggesting unprofitable investments in finance, poor data quality can result in costly mistakes.
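
The bias risk described above can be partly screened for before training by measuring how each group is represented in the data. The sketch below is a hedged illustration in plain Python: the `gender` and `hired` fields, the sample rows, and the 30% minimum share are assumptions chosen for the example, and a real fairness review would look at far more than representation counts.

```python
from collections import Counter

# Hypothetical applicant records; field names and values are illustrative.
APPLICANTS = (
    [{"gender": "male", "hired": True}] * 6
    + [{"gender": "male", "hired": False}] * 2
    + [{"gender": "female", "hired": True}] * 1
    + [{"gender": "female", "hired": False}] * 1
)


def group_shares(rows, field):
    """Return each group's share of the dataset."""
    counts = Counter(row[field] for row in rows)
    total = sum(counts.values())
    return {group: n / total for group, n in counts.items()}


def underrepresented(rows, field, min_share=0.3):
    """List groups whose share falls below min_share (an assumed threshold)."""
    shares = group_shares(rows, field)
    return sorted(g for g, share in shares.items() if share < min_share)


def positive_rate(rows, field, label):
    """Share of positive labels within each group."""
    totals, positives = Counter(), Counter()
    for row in rows:
        totals[row[field]] += 1
        positives[row[field]] += int(row[label])
    return {g: positives[g] / totals[g] for g in totals}


SHARES = group_shares(APPLICANTS, "gender")
FLAGGED = underrepresented(APPLICANTS, "gender")
RATES = positive_rate(APPLICANTS, "gender", "hired")
print(SHARES, FLAGGED, RATES)
```

A flagged group or a large gap between the per-group positive rates does not prove the resulting model will be biased, but it is a signal that the dataset deserves a closer look before it is used for training.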

Ensuring Data Quality for DSS and AI Systems

To ensure data quality, organizations must establish processes and strategies that address these key aspects:

- Data Cleaning: This involves identifying and correcting errors in datasets, such as removing duplicate entries, correcting inaccuracies, and handling missing values. Automated data-cleaning tools can streamline this process, ensuring that AI models only process clean, high-quality data.

- Data Validation: Validating the data before it is used in AI models ensures that it meets all necessary quality standards. This includes verifying data sources, checking for consistency, and ensuring relevance. Data validation can be automated through scripts or integrated into data pipelines.

- Continuous Monitoring and Feedback: Data quality should not be a one-time check but an ongoing process. Implementing continuous monitoring systems that track data quality across the lifecycle of AI models allows organizations to identify and address issues in real time. Feedback loops are essential for maintaining data integrity and ensuring AI models evolve with high-quality data.

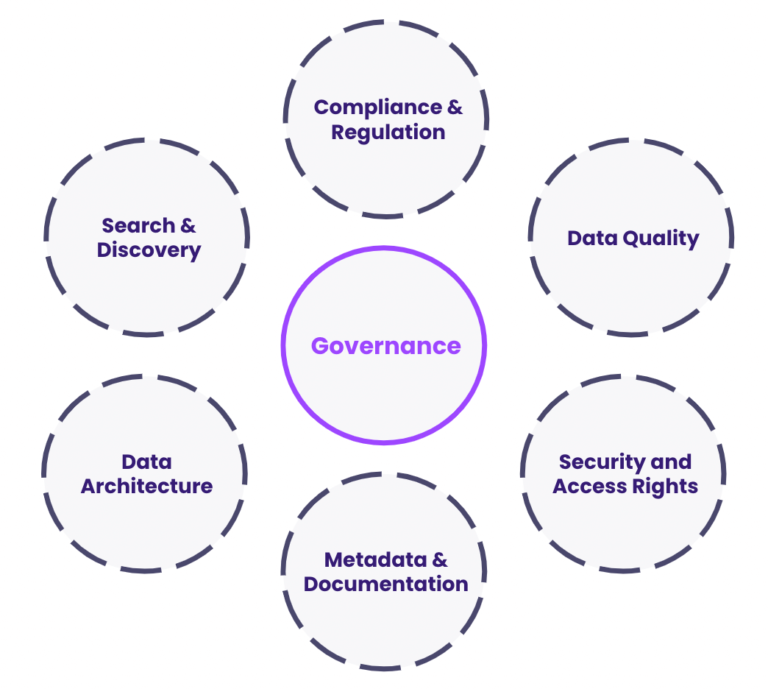

- Data Governance Frameworks: Organizations should adopt data governance frameworks that establish rules, roles, and responsibilities for data management. These frameworks ensure accountability and provide guidelines for maintaining data quality across the enterprise.

- Training Data Sourcing: Given the importance of both quantity and quality, organizations must carefully curate and source training datasets
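
As a rough illustration of the cleaning and validation steps in the list above, here is a minimal sketch in plain Python. The record fields (`id`, `age`, `country`), the validation rules, and the choice to fill missing ages with the median are assumptions made for the example; a real pipeline would pick rules that match its own data.

```python
from statistics import median

# Hypothetical raw records; fields and values are illustrative only.
RAW = [
    {"id": 1, "age": 34, "country": "US"},
    {"id": 1, "age": 34, "country": "US"},    # exact duplicate
    {"id": 2, "age": None, "country": "DE"},  # missing value
    {"id": 3, "age": 212, "country": "FR"},   # implausible value
    {"id": 4, "age": 28, "country": "us"},    # inconsistent casing
]


def clean(rows):
    """Normalize casing, drop duplicates, fill missing ages with the median."""
    seen, unique = set(), []
    for row in rows:
        row = {**row, "country": row["country"].upper()}
        key = (row["id"], row["age"], row["country"])
        if key not in seen:
            seen.add(key)
            unique.append(row)
    known_ages = [r["age"] for r in unique if r["age"] is not None]
    fill = median(known_ages)
    for r in unique:
        if r["age"] is None:
            r["age"] = fill
    return unique


def validate(row):
    """Return a list of rule violations for one row (empty means valid)."""
    problems = []
    if not 0 <= row["age"] <= 120:
        problems.append("age out of range")
    if len(row["country"]) != 2:
        problems.append("country is not a 2-letter code")
    return problems


CLEANED = clean(RAW)
REJECTED = {r["id"]: validate(r) for r in CLEANED if validate(r)}
ACCEPTED = [r for r in CLEANED if not validate(r)]
print(len(CLEANED), REJECTED)
```

The median is used for filling gaps here because it is less sensitive than the mean to implausible entries such as the age of 212, which validation then rejects. Running a function like `validate` on every incoming batch, and logging what it rejects, is one simple way to turn a one-time check into ongoing monitoring.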